Creator Michel Janse says she was on her honeymoon when she learned that her likeness was being used to promote erectile-dysfunction pills online.

“People had messaged me, saying, ‘Hey, I saw this ad, and it didn’t look like you, but it was you,’” she told Marketing Brew. “It was me, in my clothes, in my bedroom, but I didn’t do an ad there.”

Janse says the ad, which she briefly showed on her TikTok account, was a deepfake, and depicted Janse talking about her husband “Michael” and his issues with ED. The ad pulled visuals from a 33-minute video about her divorce that she posted to YouTube a year ago, which she called “by far the most personal thing I’ve ever shared.” That, along with the fact that the link in the ad directed to content that she described as “basically pornographic,” made the experience all the more violating, Janse told us.

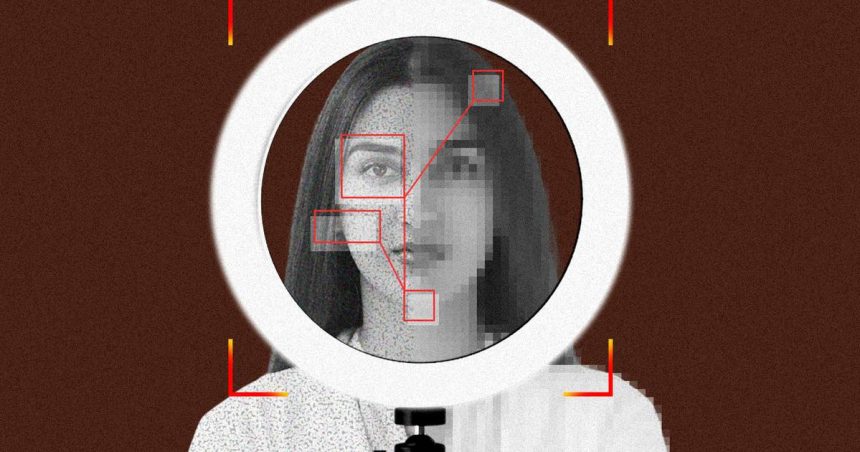

AI-generated deepfakes are a new reality, and one that creators like Janse, Ida Giancola, and MrBeast, as well as celebrities like Jennifer Aniston, Taylor Swift, and Tom Hanks, are all grappling with.

“With celebrities, this is a real issue right now because anybody anywhere can take the likeness of somebody and use it,” Rahul Titus, global head of influence at Ogilvy, told us. “With the way social media works and the spread of fake news, the damage potential is endless.”

Some states, including California and Illinois, have laws regulating and limiting deepfakes, and lawmakers have introduced federal legislation. The FCC and FTC have also moved to ban some deepfake usage. Meanwhile, Google, Meta, and TikTok have all introduced guidelines for labeling and limiting AI-generated deepfakes ahead of this year’s elections. Some brands have also weighed in: Steak-umm created a video called “DeepSteaks” with doctored focus group testimonials to help advocate for the DEEPFAKES Accountability Act.

But federal regulation still hasn’t been passed—and legislation doesn’t often move at the same pace as technological developments.

“Looking back, I probably should have been more shocked or concerned than I was,” Janse said. “But when you hear so much about what can happen on the internet, I’ve kind of been mentally preparing for my turn.”

“We’re living in a Black Mirror episode”

Concerns around deepfakes are mounting at the same time as brands are experimenting with the use of authorized AI-generated likenesses. In 2018, Cara Delevingne worked with German retailer Zalando to create more than 290,000 localized ads featuring her likeness, and in 2021, Bollywood star Shah Rukh Khan partnered with Cadbury Celebrations and Ogilvy India on a campaign that let small-business owners generate ads in which a likeness of Khan mentioned their business by name. Last year, Queen Latifah partnered with Lenovo on a similar campaign, and Meta reportedly paid celebrities like Kendall Jenner and Tom Brady handsomely for permission to use their likenesses as part of its AI Personas.

“As [AI] takes hold of influencer marketing and creative industries, you’ve got two options: you either embrace it or you fight it,” Titus said.

But some brands have been pushed into the conversation around AI without actually using AI-generated likenesses in their own marketing. Le Creuset, for example, was promoted in videos featuring deepfakes of stars like Selena Gomez and Taylor Swift appearing to offer giveaways of the cookware in what ended up being a scam. A statement shared with Marketing Brew by Lindsey White, a press representative for Hunter PR, said Le Creuset was “not involved with Taylor Swift for any consumer giveaway,” and pointed users to its official social channels to confirm the veracity of promotions or giveaways.

Some companies are looking to monitor social media to track unauthorized AI-generated uses of their brands. Last year, General Mills enlisted verification service Zefr to help identify instances of user-uploaded, AI-generated content involving the brand. Zefr CCO Andrew Serby thinks the ad industry will reach a point where it looks to determine “some sort of common agreement governing AI use and enforcement,” similar to the GARM Brand Safety and Suitability Framework.

When asked whether he had any tips for brands as deepfake videos, like the ones involving Le Creuset, pop up, Serby encouraged them to develop action plans and “structured policies” on generative AI. Otherwise “it’s going to be Whac-a-mole” trying to play catch-up as AI-generated content spreads, he said.

For those whose likenesses are used without permission, as of now, there’s little they can do beyond suing a company or person that uses their likeness, Wasim Khaled, CEO and co-founder of Blackbird.AI, a narrative and risk intelligence service, told us.

For Janse, that option doesn’t inspire much hope. “If celebrities have dealt with this and haven’t been able to make a change, I hate to say I’m pessimistic about there being a change anytime soon, but I am,” she said.

Trust what’s real

What’s helped Janse get through this is knowing that her followers were quick to identify the deepfake as being very unlike her typical content.

“I have some confidence in people being wise to what’s going on these days,” she said. “I know a lot of people are susceptible to falling for things like that, but my initial gut instinct was, ‘It’s okay, it’s gonna be fine. People know I wouldn’t do this, people know I’m not married to a man named Michael.’”

Overall, Titus acknowledged the importance of more consumer education on what’s fake and what’s real. One way to do that, he suggested, is to require brands to “declare and disclose” all AI content, though he said that may not happen overnight.

“It took a while before it became mainstream to have paid disclosure around influencer content,” he said. “I would assume it’s going to take some time for us to get there.”

Without clear labeling, Titus said brands, creators, and celebrities alike risk losing audience trust. But even with those risks, he said, “there are still some real advantages” to using AI-generated likenesses for those groups, because of the ability to hyperpersonalize messaging.

As Janse waits to see more regulations and disclosure requirements for AI content, she said this incident has prompted her to rethink whether she’ll post her kids online after she becomes a parent or whether she’d be comfortable agreeing to let a company use her likeness.

“If you would have asked me a couple months ago, I would have been like, ‘If I felt safe about it, and if it was a life-changing amount of money, I would consider it,’” she said. “Now, having seen the dark side of things, I would really, really, really need to do my due diligence.”
